There are plenty of good resources explaining the transformer architecture online, but Rotary Position Embedding (RoPE) is often poorly explained or skipped entirely.

RoPE was first introduced in the paper RoFormer: Enhanced Transformer with Rotary Position Embedding. The mathematical operations involved are relatively straightforward (primarily rotation matrices and matrix multiplication), so the real challenge lies in understanding the intuition behind how it works. I’ll try to provide a way to visualize what it’s doing to vectors and explain why this approach is so effective.

I assume you have a basic understanding of transformers and the attention mechanism throughout this post.

RoPE Intuition

Since transformers lack inherent understanding of order and distances, researchers developed positional embeddings. Here’s what positional embeddings should accomplish:

- Tokens closer to each other should attend with higher weights, while distant tokens should attend with lower weights.

- Position within a sequence shouldn’t matter, i.e. if two words are close to each other, they should attend to each other with higher weights regardless of whether they appear at the beginning or end of a long sequence.

- To accomplish these goals, relative positional embeddings are far more useful than absolute positional embeddings.

Key insight: LLMs should focus on the relative positions between two tokens, which is what truly matters for attention.

If you understand these concepts, you’re already halfway there.

Before RoPE

The original positional embeddings from the seminal paper Attention is All You Need were defined by a closed-form equation and then added to the semantic embeddings. Mixing position and semantic signals in the hidden state was not a good idea. Later research confirmed that LLMs were memorizing (overfitting) positions rather than generalizing, causing rapid deterioration when sequence lengths exceeded those seen in training. Still, using a closed-form formula makes sense: it lets us compute an embedding for any position, and RoPE does something similar.
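For reference, here is a minimal sketch of that closed-form (sinusoidal) embedding, following the sine/cosine formula from Attention is All You Need. The function name `sinusoidal_embedding` and the NumPy layout are my own illustrative choices, and I assume an even `d_model`:

```python
import numpy as np

def sinusoidal_embedding(num_positions: int, d_model: int, base: float = 10_000.0) -> np.ndarray:
    """Return a (num_positions, d_model) table of sinusoidal position embeddings."""
    positions = np.arange(num_positions)[:, None]    # shape (P, 1)
    pair_index = np.arange(d_model // 2)[None, :]    # shape (1, d_model / 2)
    angles = positions / base ** (2 * pair_index / d_model)
    table = np.empty((num_positions, d_model))
    table[:, 0::2] = np.sin(angles)  # even dimensions
    table[:, 1::2] = np.cos(angles)  # odd dimensions
    return table

# Because it is a formula, any position can be computed, even ones never seen in training.
table = sinusoidal_embedding(num_positions=8, d_model=16)
```

In the original scheme this table is simply added to the token embeddings, which is exactly the mixing of position and semantics discussed above.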

One strategy that proved successful in early deep learning was: when unsure how to compute useful features for a neural network, let the network learn them itself! That’s what models like GPT-3 did — they learned their own position embeddings. However, providing too much freedom increases overfitting risks and, in this case, creates hard limits on context windows (you can’t extend it beyond your trained context window).

The best approaches focused on modifying the attention mechanism so that nearby tokens receive higher attention weights while distant tokens receive lower weights. Isolating the position information in the attention mechanism preserves the hidden state and keeps it focused on semantics. These techniques primarily tried to cleverly modify Q and K so their dot products would reflect proximity. Many papers attempted different methods, but RoPE was the one that best solved the problem.

Rotation Intuition

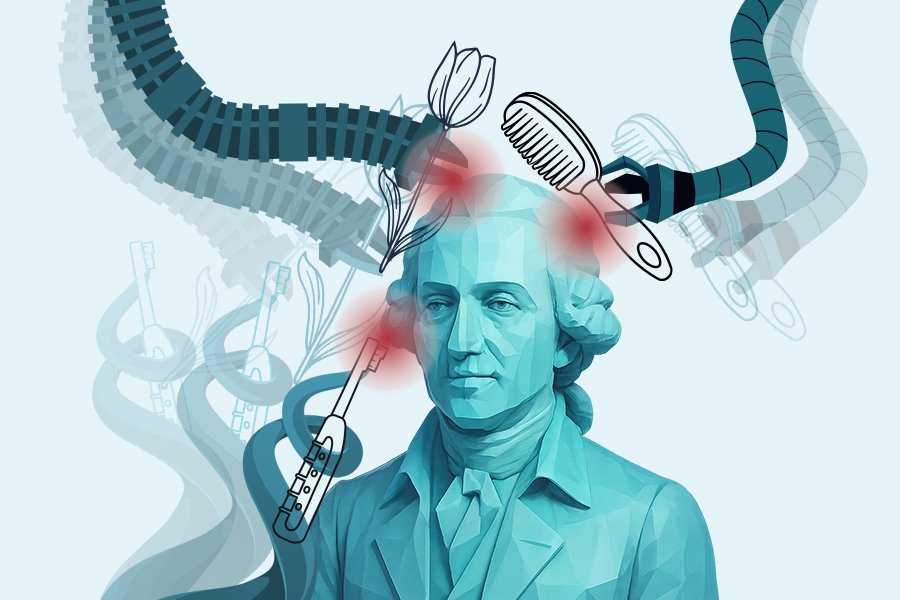

RoPE modifies Q and K by applying rotations to them. One of the nicest properties of rotation is that it preserves a vector’s norm (its magnitude), which potentially carries semantic information.

Let q be the query projection of a token and k be the key projection of another. For tokens that are close in the text, minimal rotation is applied, while distant tokens undergo larger rotational transformations.

Imagine two identical projection vectors — any rotation would make them more distant from each other. That’s exactly what we want.
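A quick way to check both claims (rotation preserves the norm, and rotating one of two identical vectors lowers their dot product) is a tiny 2D experiment. This is only a sketch; `rotate_2d` and the example vector are hypothetical names and values I picked for illustration:

```python
import numpy as np

def rotate_2d(vec: np.ndarray, angle: float) -> np.ndarray:
    """Rotate a 2D vector counterclockwise by `angle` radians."""
    c, s = np.cos(angle), np.sin(angle)
    rotation = np.array([[c, -s], [s, c]])
    return rotation @ vec

q = np.array([3.0, 4.0])
k = q.copy()  # an identical projection

# The norm is unchanged by rotation.
norm_before = np.linalg.norm(q)
norm_after = np.linalg.norm(rotate_2d(q, 0.7))

# The dot product with the unrotated k shrinks as the angle grows (for angles up to pi).
scores = [float(rotate_2d(q, angle) @ k) for angle in (0.0, 0.5, 1.0, 1.5)]
```

Here each score equals the squared norm times the cosine of the rotation angle, so the unrotated pair gets the highest score.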

Now, here’s a potentially confusing situation: if two projection vectors are already far apart, rotation might bring them closer together. That’s not what we want! They’re being rotated because they’re distant in the text, so they shouldn’t receive high attention weights. Why does this still work?

- In 2D, there’s only one rotation plane (xy). You can only rotate clockwise or counterclockwise.

- In 3D, there are infinitely many rotation planes, making it highly unlikely that rotation will bring two vectors closer together.

- Modern models operate in very high-dimensional spaces (10k+ dimensions), making this even more improbable.

Remember: in deep learning, probabilities matter most! It’s acceptable to be occasionally wrong as long as the probabilities are low.

Angle of Rotation

The rotation angle depends on two factors: m and i. Let’s examine each.

Token Absolute Position m

Rotation increases as the token’s absolute position m increases.

I know what you’re thinking: “m is absolute position, but didn’t you say relative positions matter most?”

Here’s the magic: consider a 2D plane where you rotate one vector by 𝛼 and another by β. The angular difference between them becomes 𝛼-β. The absolute values of 𝛼 and β don’t matter, only their difference does. So for two tokens at positions m and n, the rotation modifies the angle between them proportionally to m-n.

For simplicity, we can think of it as rotating only q (this is mathematically accurate since we care about final distances, not coordinates).
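The relative-position property is easy to verify numerically in 2D. In this sketch, `theta`, the vectors, and the helper names are arbitrary values I chose for illustration:

```python
import numpy as np

def rotate_2d(vec: np.ndarray, angle: float) -> np.ndarray:
    """Rotate a 2D vector counterclockwise by `angle` radians."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s], [s, c]]) @ vec

theta = 0.1  # hypothetical rotation per position, in radians
q = np.array([1.0, 2.0])
k = np.array([0.5, -1.5])

def score(m: int, n: int) -> float:
    """Dot product after rotating q by m * theta and k by n * theta."""
    return float(rotate_2d(q, m * theta) @ rotate_2d(k, n * theta))

early = score(5, 2)        # near the start of the sequence, offset 3
late = score(1005, 1002)   # far into the sequence, same offset 3

# Rotating q alone by (m - n) * theta gives the same score.
only_q = float(rotate_2d(q, 3 * theta) @ k)
```

All three values agree up to floating-point error, because the two rotations combine into a single rotation by (m - n) * theta.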

Hidden State Index i

Instead of applying uniform rotation across all hidden state dimensions, RoPE processes two dimensions at a time, applying different rotation angles to each pair. In other words, it breaks the long vector into multiple pairs that can be rotated in 2D by different angles.

We rotate hidden state dimensions differently — rotation is higher when i is low (vector beginning) and lower when i is high (vector end).

Understanding this operation is straightforward, but understanding why we need it requires more explanation:

- It allows the model to choose what should have shorter or longer ranges of influence.

- Imagine vectors in 3D (xyz).

- The x and y axes represent early dimensions (low i) that undergo higher rotation. Tokens projected mainly onto x and y must be very close to attend with high intensity.

- The z axis, where i is higher, rotates less. Tokens projected mainly onto z can attend even when distant.

Rotating in the xy plane: two vectors encoding information mainly in z remain close despite rotation (tokens that should attend despite longer distances!).

Two vectors encoding information mainly in x and y become very far apart (nearby tokens where one shouldn’t attend to the other).

This structure captures complicated nuances in human language. Pretty cool, right?
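To make the fast-versus-slow idea concrete, here is a toy sketch with a 4-dimensional vector split into two pairs. The per-position angles in `PAIR_THETAS` are made-up values (one fast pair, one slow pair), not the ones RoPE actually uses:

```python
import numpy as np

# Hypothetical per-position angles for two 2D pairs: one fast, one slow.
PAIR_THETAS = np.array([1.0, 0.01])

def rotate_pairs(vec: np.ndarray, position: int) -> np.ndarray:
    """Rotate each consecutive pair (x0, x1), (x2, x3) by position * theta."""
    pairs = vec.reshape(-1, 2)
    angles = position * PAIR_THETAS
    cos, sin = np.cos(angles), np.sin(angles)
    rotated = np.stack(
        [pairs[:, 0] * cos - pairs[:, 1] * sin,
         pairs[:, 0] * sin + pairs[:, 1] * cos],
        axis=-1,
    )
    return rotated.reshape(-1)

def similarity(vec: np.ndarray, distance: int) -> float:
    """Dot product of a unit vector with a copy of itself `distance` tokens away."""
    return float(rotate_pairs(vec, distance) @ rotate_pairs(vec, 0))

short_range = np.array([1.0, 0.0, 0.0, 0.0])  # all energy in the fast pair
long_range = np.array([0.0, 0.0, 1.0, 0.0])   # all energy in the slow pair

fast_scores = [similarity(short_range, d) for d in (0, 1, 2, 3)]
slow_scores = [similarity(long_range, d) for d in (0, 1, 2, 3)]
```

The vector living in the fast pair loses its similarity within a couple of tokens, while the one living in the slow pair barely changes over the same distances.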

Once again, I know what you’re thinking: “after too much rotation, they start getting close again”.

That’s correct, but here’s why it still works:

- We’re visualizing in 3D, but this actually happens in much higher dimensions.

- Although some dimensions grow closer, others that rotate more slowly continue growing farther apart. Hence the importance of rotating dimensions by different angles.

- RoPE isn’t perfect — due to its rotational nature, local maxima do occur. See the theoretical chart from the original authors:

The theoretical curve has some crazy bumps, but in practice I found it to be much better behaved:

An idea that occurred to me was clipping the rotation angle so the similarity strictly decreases as distance increases. I’ve seen clipping being applied to other techniques, but not to RoPE.

Bear in mind that cosine similarity tends to grow (although slowly) as the distance grows a lot past our base value (later you’ll see exactly what this base is in the formula). A simple solution here is to increase the base, or even let techniques like local or window attention take care of it.
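If you want to explore such a curve yourself, here is a rough sketch. It makes a strong simplifying assumption: q and k are identical and carry equal energy in every pair, so the cosine similarity at a given distance is just the average of the cosines of the per-pair angles. The angles use the θ formula given in the formula section; `pair_thetas` and `similarity_curve` are names I made up:

```python
import numpy as np

def pair_thetas(d_model: int, base: float = 10_000.0) -> np.ndarray:
    """theta_i = base^(-2(i-1)/d_model) for i = 1 .. d_model/2."""
    i = np.arange(1, d_model // 2 + 1)
    return base ** (-2.0 * (i - 1) / d_model)

def similarity_curve(distances, d_model: int = 128, base: float = 10_000.0) -> np.ndarray:
    """Cosine similarity between a vector and its rotated copy at each distance.

    Assumes equal energy in every 2D pair (a simplification).
    """
    thetas = pair_thetas(d_model, base)
    distances = np.asarray(distances, dtype=float)
    return np.cos(distances[:, None] * thetas[None, :]).mean(axis=1)

distances = np.array([0, 1, 4, 16, 64, 256])
curve = similarity_curve(distances)
curve_big_base = similarity_curve(distances, base=1_000_000.0)
```

Plotting `curve` against a dense range of distances is a good way to see the bumps, and comparing it with `curve_big_base` gives a feel for how the base stretches the useful range. Real q and k vectors are not uniform like this, so treat it as a toy model.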

Bottom line: The LLM learns to project long-range and short-range meaning influence in different dimensions of q and k.

Here are some concrete examples of long-range and short-range dependencies:

- The LLM processes Python code where an initial transformation is applied to a dataframe df. This relevant information should potentially carry over a long range and influence the contextual embedding of downstream df tokens.

- Adjectives typically characterize nearby nouns. In “A beautiful mountain stretches beyond the valley”, the adjective beautiful specifically describes the mountain, not the valley, so it should primarily affect the mountain embedding.

The Angle Formula

Now that you understand the concepts and have strong intuition, here are the equations. The rotation angle is defined by:

\[\text{angle} = m \times \theta\]

\[\theta = 10,000^{-2(i-1)/d_{model}}\]

- m is the token’s absolute position

- i ∈ {1, 2, …, d/2} indexes pairs of hidden state dimensions; since we process two dimensions at a time, we only need to iterate to d/2 rather than d

- d_model (written d for short) is the hidden state dimension (e.g., 4,096)

Notice that when:

\[i=1 \Rightarrow \theta=1 \quad \text{(high rotation)} \]

\[i=d/2 \Rightarrow \theta \approx 1/10,000 \quad \text{(low rotation)}\]
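Putting the formula together with the pairwise rotation, here is a minimal NumPy sketch of applying RoPE to a matrix of queries or keys. It is not a reference implementation: the names `rope_thetas` and `apply_rope` are mine, I assume positions start at 0, I pair adjacent dimensions (0, 1), (2, 3), and so on, and I rotate the full `d_model` vector. Real implementations typically work per attention head, and some pair dimensions differently.

```python
import numpy as np

def rope_thetas(d_model: int, base: float = 10_000.0) -> np.ndarray:
    """theta_i = base^(-2(i-1)/d_model) for i = 1 .. d_model/2."""
    i = np.arange(1, d_model // 2 + 1)
    return base ** (-2.0 * (i - 1) / d_model)

def apply_rope(x: np.ndarray, base: float = 10_000.0) -> np.ndarray:
    """Rotate consecutive pairs of x, shape (seq_len, d_model); row m is position m."""
    seq_len, d_model = x.shape
    # angle[m, i] = m * theta_i
    angles = np.arange(seq_len)[:, None] * rope_thetas(d_model, base)[None, :]
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = x_even * cos - x_odd * sin
    out[:, 1::2] = x_even * sin + x_odd * cos
    return out

rng = np.random.default_rng(0)
q = rng.normal(size=(6, 8))  # 6 tokens, d_model = 8
k = rng.normal(size=(6, 8))

# Attention logits before scaling and softmax.
scores = apply_rope(q) @ apply_rope(k).T
```

Note that the hidden state itself is never touched: only q and k are rotated, right before their dot product.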

Conclusion

- We should find clever ways to inject knowledge into LLMs rather than letting them learn everything independently.

- We do this by providing the right operations a neural network needs to process data — attention and convolutions are great examples.

- Closed-form equations can extend indefinitely since you don’t need to learn each position embedding.

- This is why RoPE provides excellent sequence length flexibility.

- The most important property: attention weights decrease as relative distances increase.

- This follows the same intuition as local attention in alternating attention architectures.