
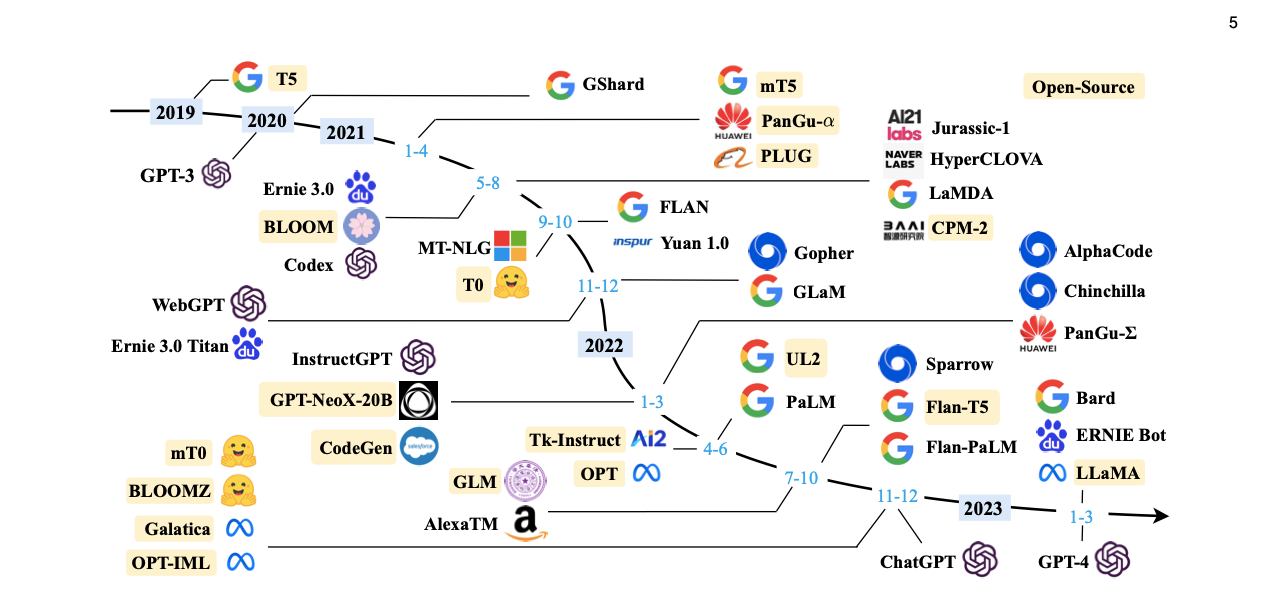

The number of large language models (LLMs) has been increasing since 2019 (see Figure 1) due to the models’ extensive application areas and capabilities. Yet estimates show that designing a new foundation model can cost up to $90 million, while fine-tuning or enhancing an existing large language model can cost between $100 thousand and $1 million [1]. Such high and volatile costs result from:

- Fixed factors: hardware costs, training runs, and data gathering and labelling.

- Variable factors: people and R&D costs, and computational costs.

LLMOps can help reduce costs by easing operational challenges. However, LLMOps tools are relatively recent, and the market landscape can confuse business leaders and IT teams.

This article briefly explains the LLMOps market and compares available tools.

LLMOps Landscape

There are more than 20 tools on the market that claim to be LLMOps tools. They can be evaluated under three categories:

1.) LLMOps platforms

These platforms are either designed from the ground up for LLMOps or not necessarily designed for LLMOps, but in both cases they offer several capabilities that are useful for training models such as large language models. Some of these features are:

- Fine-tuning LLMs.

- Versioning and deploying LLMs.

These LLM platforms can offer different levels of flexibility and ease of use:

- No-code LLM platforms: Some of these platforms are no-code and low-code, which facilitate LLM adoption. In this sense, they can be evaluated as LLMOps. However, these tools typically have limited flexibility.

- Code-first platforms: These broad platforms target machine learning models, including LLMs and foundation models. They combine high flexibility with easy access to computing for expert users.

Integration frameworks

These tools are built to make developing LLM applications easier (e.g., LangChain). These frameworks can support use cases such as document analysis, code analysis, and chatbots.

Other tools

These tools streamline a smaller part of the LLM workflow, such as testing prompts, incorporating human feedback (RLHF) or evaluating datasets.

Disclaimer

We are aware that there are different approaches to categorize these tools. For instance, some vendors include other technologies that can help large language model development in this landscape, such as containerization or edge computing. However, such technologies are not built for designing or monitoring models, even though they can be paired with LLMOps tools to improve model performance. Therefore, we excluded these tools.

A more classical approach categorises them based on licence type (e.g. open source or not) and on whether they are pre-trained. Although accurate, this approach cannot explain the crucial differences among LLMOps tools. Besides, the latest research shows that the focus of LLMs, and generative AI in general, is moving from open source towards proprietary [3]. Therefore, we did not focus on this aspect in our benchmarking.

In our analysis, we removed integration frameworks and other relevant tools and focused on LLMOps platforms. Following the categorization explained above, we listed and presented the tools:

1.) MLOps platforms

Some MLOps platforms, such as ZenML, can be deployed as LLMOps tools. MLOps, or machine learning operations, is the practice of managing and optimizing the end-to-end machine learning lifecycle.

Compare all MLOps platforms in our constantly updated vendor list.

2.) LLM platforms

Some of these platforms and frameworks are built as LLMs and can also offer features of LLM operations tools. Discover such large language models (LLMs) in our comprehensive list.

3.) LLMOps platforms

This category includes tools that focus exclusively on optimizing and managing LLM operations. The table below shows the GitHub stars, number of B2B reviews and average B2B score from reputable B2B review pages (TrustRadius, Gartner & G2) for some of these LLMOps tools:

| LLMOps Tools | GitHub Stars | Number of B2B Reviews* | Average Review Score** |
|---|---|---|---|
| Deep Lake | 6,600 | NA | NA |
| Fine-Tuner AI | 6,000 | NA | NA |
| Snorkel AI | 5,500 | NA | NA |
| Zen ML | 3,000 | NA | NA |
| Lamini AI | 2,100 | NA | NA |
| Comet | 54 | NA | NA |
| Titan ML | 47 | NA | NA |
| Valohai | Not open source | 20 | 4.9 |

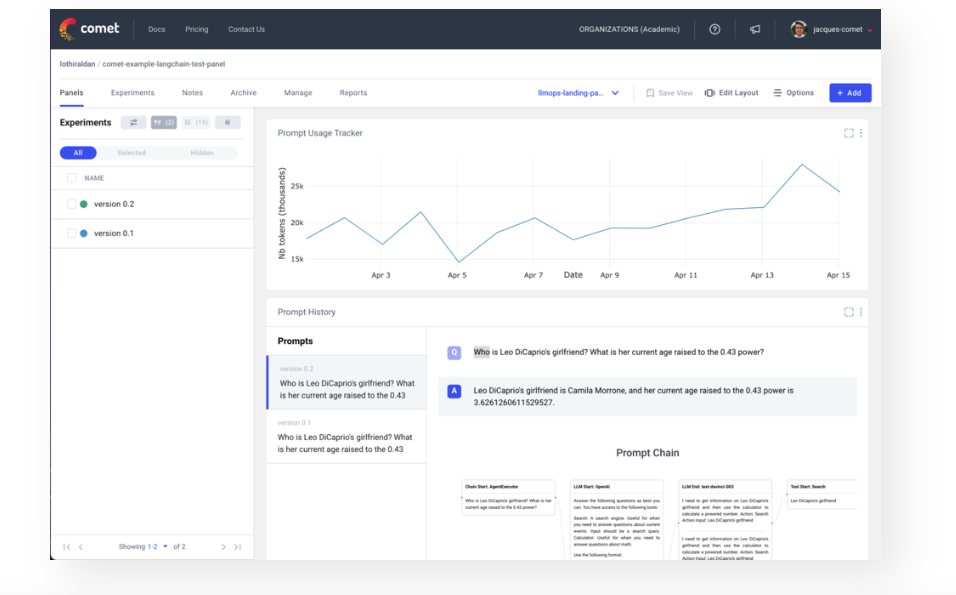

- Comet: Comet streamlines the ML lifecycle, tracking experiments and production models. Suited for large enterprise teams, it offers various deployment strategies. It supports private cloud, hybrid, and on-premise setups.

- Deep Lake: Deep Lake combines the capabilities of Data Lakes and Vector Databases to create, refine, and implement high-quality LLMs and MLOps solutions for businesses. Deep Lake allows users to visualize and manipulate datasets in their browser or Jupyter notebook, swiftly accessing different versions and generating new ones through queries, all compatible with PyTorch and TensorFlow.

- Fine-Tuner AI: Fine-Tuner AI is a no-code platform that can be connected via no-code plugins, third-party tools and a REST API. It enables users to create customizable AI agents without complex technical skills.

- Lamini AI: Lamini AI provides an easy method for training LLMs through both prompt-tuning and base model training. Lamini AI users can write custom code, integrate their own data, and host the resulting LLM on their infrastructure.

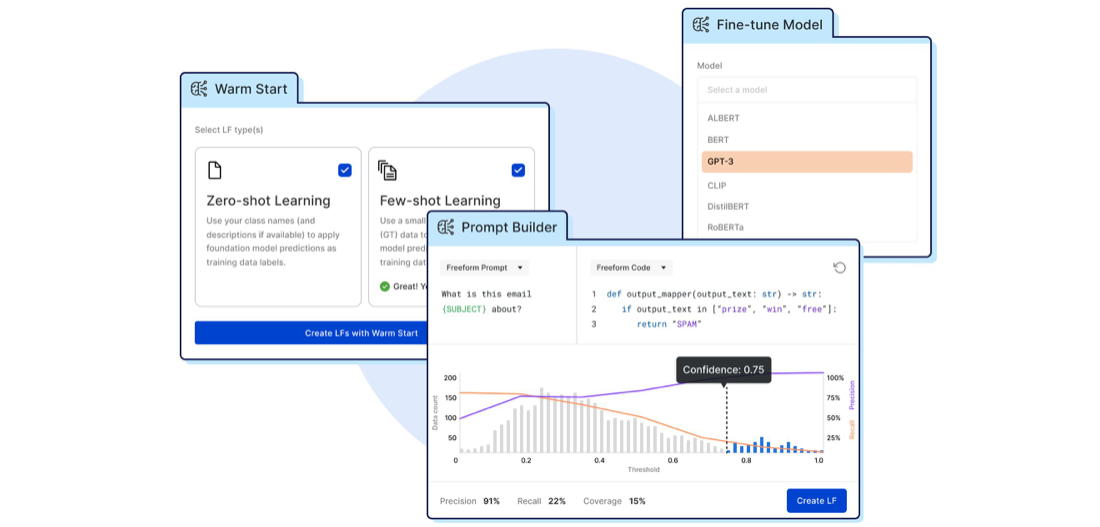

- Snorkel AI: Snorkel AI empowers enterprises to construct or customize foundation models (FMs) and large language models (LLMs) to achieve remarkable precision on domain-specific datasets and use cases. Snorkel AI introduces programmatic labelling, enabling data-centric AI development with automated processes.

- Titan ML: TitanML is an NLP development platform that aims to allow businesses to swiftly build and implement smaller, more economical deployments of large language models. It offers proprietary, automated, efficient fine-tuning and inference optimization techniques. This way, it allows businesses to create and roll out large language models in-house.

- Valohai: Valohai streamlines MLOps and LLM workflows, automating the steps from data extraction to model deployment. It can store models, experiments, and artefacts, making monitoring and deployment easier. Valohai creates an efficient workflow from code to deployment, supporting notebooks, scripts, and Git projects.

- Zen ML: ZenML primarily focuses on machine learning operations (MLOps) and the management of the machine learning workflow, including data preparation, experimentation, and model deployment.

While choosing a tool, there are a few factors you must consider:

- Define goals: Clearly outline your business goals to establish a solid foundation for your LLMOps tool selection process. For example, whether your goal requires training a model from scratch or fine-tuning an existing model has important implications for your LLMOps stack.

- Define requirements: Based on your goal, certain requirements will become more important. For example, if you aim to enable business users to use LLMs, you may want to include no-code support in your list of requirements.

- Prepare a shortlist: Consider user reviews and feedback to gain insights into real-world experiences with different LLMOps tools. Rely on this market data to prepare a shortlist.

- Compare functionality: Utilize free trials and demos provided by various LLMOps tools to compare their features and functionalities firsthand.
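The shortlisting step above can be sketched in code. The following is a minimal illustration only, not a recommended scoring method: it copies the GitHub star and B2B review figures from the table in this article and filters them against thresholds. The `Tool` class, the `shortlist` function and the threshold values are hypothetical and would need to be replaced by criteria derived from your own goals and requirements.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tool:
    name: str
    github_stars: Optional[int]  # None when the tool is not open source
    b2b_reviews: Optional[int]   # None when the table lists NA
    avg_score: Optional[float]   # None when the table lists NA


# Figures copied from the comparison table in this article.
TOOLS = [
    Tool("Deep Lake", 6600, None, None),
    Tool("Fine-Tuner AI", 6000, None, None),
    Tool("Snorkel AI", 5500, None, None),
    Tool("Zen ML", 3000, None, None),
    Tool("Lamini AI", 2100, None, None),
    Tool("Comet", 54, None, None),
    Tool("Titan ML", 47, None, None),
    Tool("Valohai", None, 20, 4.9),
]


def shortlist(tools, min_stars=1000, min_reviews=10, min_score=4.0):
    """Keep tools that pass either the GitHub-star or the review criterion.

    The default thresholds are hypothetical, chosen only for illustration.
    """
    selected = []
    for tool in tools:
        popular = tool.github_stars is not None and tool.github_stars >= min_stars
        well_reviewed = (
            tool.b2b_reviews is not None
            and tool.avg_score is not None
            and tool.b2b_reviews >= min_reviews
            and tool.avg_score >= min_score
        )
        if popular or well_reviewed:
            selected.append(tool)
    return selected


if __name__ == "__main__":
    for tool in shortlist(TOOLS):
        print(tool.name)
```

Stars and review counts are popularity signals rather than measures of fit, so a filter like this is best treated as a first pass before comparing functionality through trials and demos.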

What are LLMOps?

Large language models (LLMs) are advanced machine learning models designed to understand and generate human-like text based on the patterns and information they have learned from training data. These models are built using deep learning techniques to capture intricate linguistic nuances and context.

LLMOps refers to the techniques and tools used for the operational management of LLMs in production environments.

External sources

1. “The CEO’s Roadmap on Generative AI.” BCG. March 2023. Revisited August 11, 2023.

2. “A Survey of Large Language Models.” GitHub. March 2023. Revisited August 11, 2023.

3. “The CEO’s Roadmap on Generative AI.” BCG. March 2023. Revisited August 11, 2023.

4. “Debugging Large Language Models with Comet LLMOps Tools.” Comet. Revisited August 16, 2023.

5. Harvey, N. (March 20, 2023). “Snorkel Flow Spring 2023: warm starts and foundation models.” Snorkel AI. Revisited August 16, 2023.
