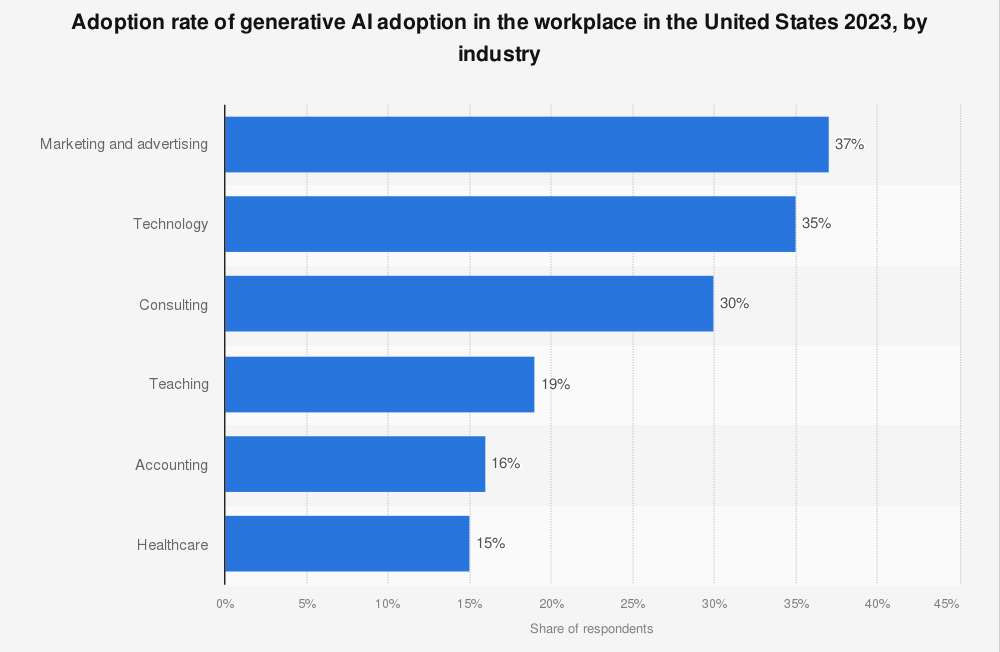

As we witness the digital transformation of industries, generative AI is rapidly carving out its niche in the global AI market (Figure 1). It is being used to create unique, high-quality content, simulate human language, design innovative product prototypes, and even compose music.

However, unlocking the full potential of generative AI requires vast, diverse, and relevant data to train its models. This requirement challenges developers and business leaders alike, because collecting and preparing such data can be difficult.

This article explores generative AI data, its importance, and some methods for collecting relevant training data.

Figure 1. Adoption of generative AI

What is generative AI data?

Generative AI data refers to the vast corpus of information used to train generative AI models, such as large language models (LLMs). Depending on the model, this data can include text, images, audio, or video. The models learn patterns from this data, enabling them to generate new content that matches the input data's complexity, style, and structure.

The importance of private data in generative AI

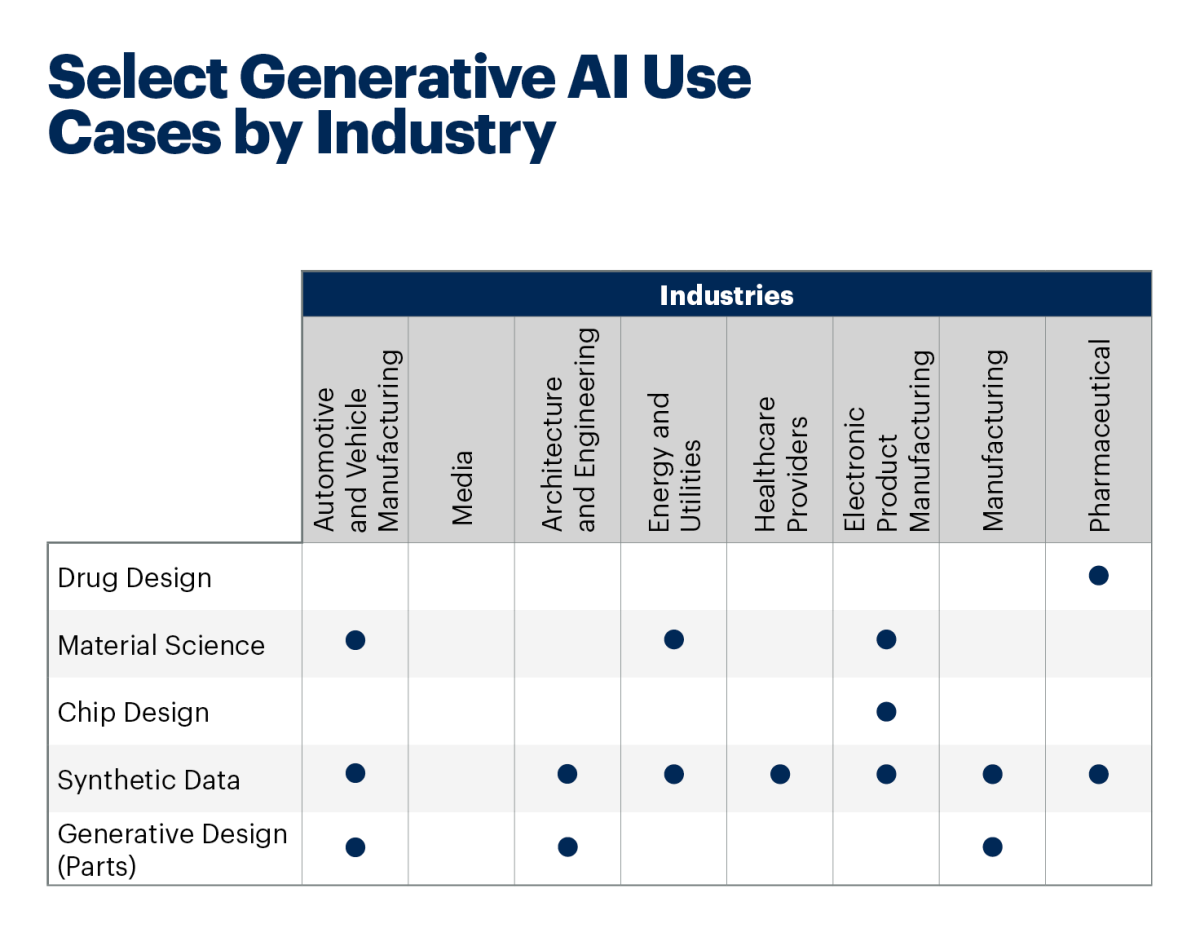

Ever since the launch of OpenAI's ChatGPT, generative AI has taken the tech world by storm. Business leaders are optimistic about applying generative AI across different areas (Figure 2).

A key aspect of generative AI’s success lies in its ability to offer contextually accurate and relevant output. To achieve this, the quality of the input data is crucial. Private data, which is specific, tailored, and often proprietary, can significantly enhance the performance of generative AI models.

For instance, Bloomberg developed BloombergGPT [1], a 50-billion-parameter language model trained on a mix of Bloomberg's proprietary financial data and general-purpose datasets. The model outperformed similarly sized open models on finance-related tasks, showing how targeted, industry-specific data can create a competitive edge in the generative AI space.

Figure 2. Generative AI use cases

7 Methods of collecting data for generative AI

When training large language models (LLMs), data acquisition is often the first hurdle. Below are some methods developers can use:

1. Crowdsourcing

Crowdsourcing involves obtaining data from a large group of people, usually through the internet. This method can provide diverse, high-quality data. Imagine training a conversational AI model. You could crowdsource conversational data from users around the world, enabling the model to understand and generate dialogue in various languages and styles.

However, crowdsourcing requires an online platform that helps the company hire and manage the crowd that gathers the data. Working with a crowdsourcing service provider can be a more efficient way to use this approach when preparing quality datasets for generative AI training.
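Whichever platform is used, crowd contributions usually need some quality control before they become training data. One common approach (our assumption here; the method is not specific to any provider) is to have several workers label or review each item and keep only the items where most of them agree. A minimal Python sketch, with hypothetical item IDs, labels, and agreement threshold:

```python
from collections import Counter


def aggregate_votes(votes: dict[str, list[str]], min_agreement: float = 0.6) -> dict[str, str]:
    """Keep an item's majority label only if enough independent workers agree on it."""
    accepted = {}
    for item_id, labels in votes.items():
        if not labels:
            continue  # no reviews yet, nothing to decide
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) >= min_agreement:
            accepted[item_id] = label
    return accepted


# Hypothetical reviews of crowdsourced utterances for a conversational dataset.
votes = {
    "utt-1": ["polite", "polite", "rude"],
    "utt-2": ["rude", "polite"],
    "utt-3": ["polite", "polite", "polite"],
}
print(aggregate_votes(votes))  # {'utt-1': 'polite', 'utt-3': 'polite'}
```

Items without clear agreement, such as `utt-2`, are dropped rather than guessed; in practice they might instead be sent back for additional reviews.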

Sponsored

Clickworker focuses on AI data generation and dataset preparation through a crowdsourcing platform. The company’s global team of over 4.5 million workers helps 4 out of 5 tech giants in the US with their data needs. Clickworker’s scalable data services can help train and improve complex generative AI models with human-generated data.

2. Web crawling and scraping

Web crawling and scraping involve the automated extraction of data from the internet. For example, a generative AI model focusing on news generation might use a crawler to gather articles from various news websites.
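As a rough illustration of the extraction step, the sketch below pulls paragraph text out of an already-downloaded news page using only Python's standard-library `html.parser`. It assumes article text lives in `<p>` elements, which varies by site; fetching pages (for example with `urllib.request`) is omitted, and any real crawler should respect each site's robots.txt and terms of service.

```python
from html.parser import HTMLParser


class ArticleTextExtractor(HTMLParser):
    """Collect visible text from <p> elements, ignoring <script> and <style> content."""

    def __init__(self):
        super().__init__()
        self.paragraphs: list[str] = []
        self._in_p = False
        self._skip_depth = 0
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "p":
            self._in_p = True
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1
        elif tag == "p" and self._in_p:
            # Collapse runs of whitespace and drop empty paragraphs.
            text = " ".join("".join(self._buffer).split())
            if text:
                self.paragraphs.append(text)
            self._in_p = False

    def handle_data(self, data):
        if self._in_p and not self._skip_depth:
            self._buffer.append(data)


def extract_paragraphs(html: str) -> list[str]:
    parser = ArticleTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.paragraphs


sample_html = """<html><head><style>p {color: red}</style></head>
<body><h1>Headline</h1><p>First   paragraph of the <b>story</b>.</p>
<script>var x = 1;</script><p>Second paragraph.</p><p>  </p></body></html>"""
print(extract_paragraphs(sample_html))
# ['First paragraph of the story.', 'Second paragraph.']
```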

You can also check our data-driven list of web scraping and crawling tools to find the most suitable option for your business.

3. Synthetic data generation

With the advent of powerful generative AI models, synthetic data generation is gaining traction. In this approach, one generative AI model creates synthetic data to train another. For instance, a generative AI model could create fictional customer interactions to train a customer service AI model. This approach can provide a large amount of relevant, diverse data while reducing the need to use real personal data.
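The workflow described above can be sketched as a small pipeline: build a prompt for each combination of customer intent and persona, send it to a generator model, and keep only well-formed replies. In this sketch, `generate` is a placeholder for whatever model call you actually use (no specific API is assumed), and the prompt template, intents, and personas are hypothetical.

```python
import json
from itertools import product
from typing import Callable

# Hypothetical prompt template; adjust to the generator model you actually use.
PROMPT_TEMPLATE = (
    "Write a short, fictional customer-service chat as JSON with keys "
    '"customer" and "agent". The customer is {persona} and wants to {intent}. '
    "Do not include real names, emails, or account numbers."
)


def synthesize_dialogues(
    generate: Callable[[str], str],
    intents: list[str],
    personas: list[str],
) -> list[dict]:
    """Prompt the generator once per (intent, persona) pair and keep valid JSON replies."""
    records = []
    for intent, persona in product(intents, personas):
        prompt = PROMPT_TEMPLATE.format(persona=persona, intent=intent)
        raw = generate(prompt)
        try:
            dialogue = json.loads(raw)
        except json.JSONDecodeError:
            continue  # discard malformed generations instead of training on them
        if isinstance(dialogue, dict) and {"customer", "agent"}.issubset(dialogue):
            records.append({"intent": intent, "persona": persona, **dialogue})
    return records


def fake_generate(prompt: str) -> str:
    """Stand-in for a real model call, returning canned output for the demo."""
    if "refund" in prompt:
        return json.dumps({"customer": "I'd like a refund.", "agent": "I can help with that."})
    return "not json"


data = synthesize_dialogues(fake_generate, ["request a refund", "reset a password"], ["a new customer"])
print(len(data))  # 1
```

Filtering out malformed or incomplete generations matters here: the second model learns from whatever the first one produces, so basic validation keeps obvious errors out of the training set.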

Generative adversarial networks (GANs) can also be used to create synthetic data.

4. Public datasets

Many organizations and individuals make datasets publicly available for research and development purposes, and these datasets can be used to train generative AI systems. These can include datasets of:

- Text: These are often used to train large language models such as GPT-3.

- Images: These datasets are usually used to train text-to-image models such as OpenAI's DALL-E.

- Audio: This data is typically used for tasks such as speech synthesis, music generation, or sound effect generation. A popular example is WaveNet by DeepMind.

- Video: Generative AI systems that use video data are usually focused on tasks such as video synthesis, video prediction, or video-to-video translation.

Some examples of public datasets include:

- Wikipedia dumps for text

- ImageNet for images

- LibriSpeech for audio

- Books

- News articles

- Scientific journals

5. User-generated content

Platforms such as social media sites, blogs, and forums are full of user-generated content that can be used as training data, subject to appropriate privacy and usage considerations. However, some popular platforms, such as Reddit [2], no longer offer free access to their data for companies training generative AI tools.

6. Data augmentation

Existing data can be modified or combined to create new data. This approach, called data augmentation, can be used to prepare datasets for training generative AI models. For example, images can be rotated, scaled, or otherwise transformed, while text data can be augmented by substituting, deleting, or reordering words.
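The three text operations mentioned above can be sketched in a few lines of Python. This is a minimal illustration, assuming a tiny hand-written synonym table (a real pipeline would need a much larger, domain-appropriate source) and arbitrary probabilities; each variant applies one randomly chosen operation, and a fixed seed keeps the augmented dataset reproducible.

```python
import random

# Toy synonym table for illustration only.
SYNONYMS = {
    "quick": ["fast", "rapid"],
    "happy": ["glad", "pleased"],
    "buy": ["purchase"],
}


def substitute(words, rng, p=0.5):
    """Replace each word that has known synonyms with probability p."""
    return [
        rng.choice(SYNONYMS[w]) if w in SYNONYMS and rng.random() < p else w
        for w in words
    ]


def delete(words, rng, p=0.2):
    """Drop each word with probability p, but never return an empty sentence."""
    kept = [w for w in words if rng.random() >= p]
    return kept or [rng.choice(words)]


def reorder(words, rng, n_swaps=1):
    """Swap n_swaps random pairs of word positions."""
    words = list(words)
    for _ in range(n_swaps):
        if len(words) < 2:
            break
        i, j = rng.sample(range(len(words)), 2)
        words[i], words[j] = words[j], words[i]
    return words


def augment(sentence, n_variants=3, seed=0):
    """Create n_variants new sentences, each produced by one random operation."""
    words = sentence.split()
    if not words:
        return []
    rng = random.Random(seed)  # seeded so results are reproducible
    ops = [substitute, delete, reorder]
    return [" ".join(rng.choice(ops)(words, rng)) for _ in range(n_variants)]


for variant in augment("the quick customer was happy to buy it", n_variants=4, seed=7):
    print(variant)
```

Note that reordering words can produce ungrammatical sentences; whether that noise helps or hurts depends on the model and task, so augmented samples are often mixed with, rather than substituted for, the original data.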

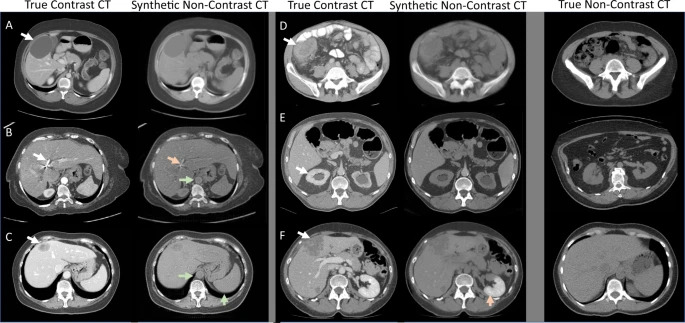

Studies have used generative adversarial networks (GANs) to augment brain CT scan data (Figure 3).

Figure 3. Data augmentation using CycleGAN

7. Customer data

Proprietary data, such as customer call logs, can also be used to train large language models, particularly for customer service tasks such as automated response generation, sentiment analysis, or intent recognition. However, several important factors must be considered when using this data:

- Transcription: Call logs are usually audio recordings and must be transcribed into text before they can train text-based models like GPT-3 or GPT-4.

- Privacy: Ensure call logs are anonymized and comply with privacy laws and regulations, possibly requiring explicit customer consent.

- Bias: Call logs may contain biases (for example, over-representing certain call types or times of day), which can skew model performance on under-represented calls.

- Data cleaning: Call logs require cleaning to remove noise such as irrelevant conversation, background noise, or transcription errors.
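Part of the anonymization and cleaning steps listed above can be illustrated on transcribed text. The sketch below is a minimal example under stated assumptions: the regular expressions for emails and phone numbers and the filler-word list are illustrative only, real PII detection needs far broader coverage (names, addresses, account numbers) and usually dedicated tooling, and the phone pattern may over-match other digit runs such as dates.

```python
import re

# Illustrative patterns only, not a complete PII detector.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")  # may also match dates or IDs
FILLERS = {"um", "uh", "erm", "hmm"}


def clean_transcript_line(line: str) -> str:
    """Mask emails and phone numbers, then drop filler words."""
    line = EMAIL_RE.sub("[EMAIL]", line)  # emails first so their digits aren't read as phones
    line = PHONE_RE.sub("[PHONE]", line)
    words = [w for w in line.split() if w.lower().strip(",.") not in FILLERS]
    return " ".join(words)


def clean_transcript(lines: list[str]) -> list[str]:
    """Clean every line and drop lines that end up empty."""
    cleaned = (clean_transcript_line(line) for line in lines)
    return [line for line in cleaned if line]


print(clean_transcript_line(
    "Um, my email is jane.doe@example.com and my number is +1 (555) 123-4567."
))
# my email is [EMAIL] and my number is [PHONE].
```

Replacing sensitive values with placeholder tokens, rather than deleting them, keeps the sentence structure intact so the model can still learn how customers share contact details without seeing real ones.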

Conclusion

The importance of high-quality data cannot be overstated for developing generative AI systems. The right data can greatly enhance a model’s performance, driving innovation and offering a competitive edge in the market.

By exploring the data collection methods identified in this article, developers and business leaders can navigate the complexities of generative AI data.

As generative AI continues to evolve, the focus on data will only intensify. Therefore, it’s essential to stay informed and adapt, ensuring that your generative AI models are not just data-rich, but also data-smart.

References

- [1] Bloomberg (March 30, 2023). 'Introducing BloombergGPT, Bloomberg's 50-billion parameter large language model, purpose-built from scratch for finance.' Accessed 16 May 2023.

- [2] Reddit (April 18, 2023). 'Creating a Healthy Ecosystem for Reddit Data and Reddit Data API Access.' Accessed 16 May 2023.
